Elena Ierardi [Glasgow Caledonian University, Glasgow, United Kingdom; Azienda Unità Sanitaria Locale Piacenza, Piacenza, Italy]

Prof. J Chris Eilbeck [School of Mathematical and Computer Sciences and Maxwell Institute, Heriot-Watt University, Edinburgh, United Kingdom]

Prof. Frederike van Wijck [School of Health and Life Sciences, Glasgow Caledonian University, Glasgow, United Kingdom]

Dr. Myzoon Ali [NMAHP Research Unit, Glasgow Caledonian University, Glasgow, United Kingdom]

Fiona Coupar [Department of Occupational Therapy, and Human Nutrition and Dietetics, Glasgow Caledonian University, Glasgow, United Kingdom]

Systematic reviews are a well-established research method in healthcare, which aim to synthesise the best evidence to answer a specific research question, by using validated systematic methods [1]. Given their complex nature, systematic reviews are challenging due to the time, rigour and expertise needed [2]. Therefore, a reduction of workload and acceleration of screening for systematic reviews are the two main priorities [2].

Several studies have explored the applications of semi-automated tools to the screening of systematic search results, which involve searching through large amounts of data for specific information and are defined as ‘data mining’ [3]. We aimed to develop and validate a data mining-based method to facilitate screening of potentially eligible studies for an exemplar systematic review on upper limb motor impairment after stroke [4], and compare this with a standard method in terms of accuracy, eligibility, and decision time.

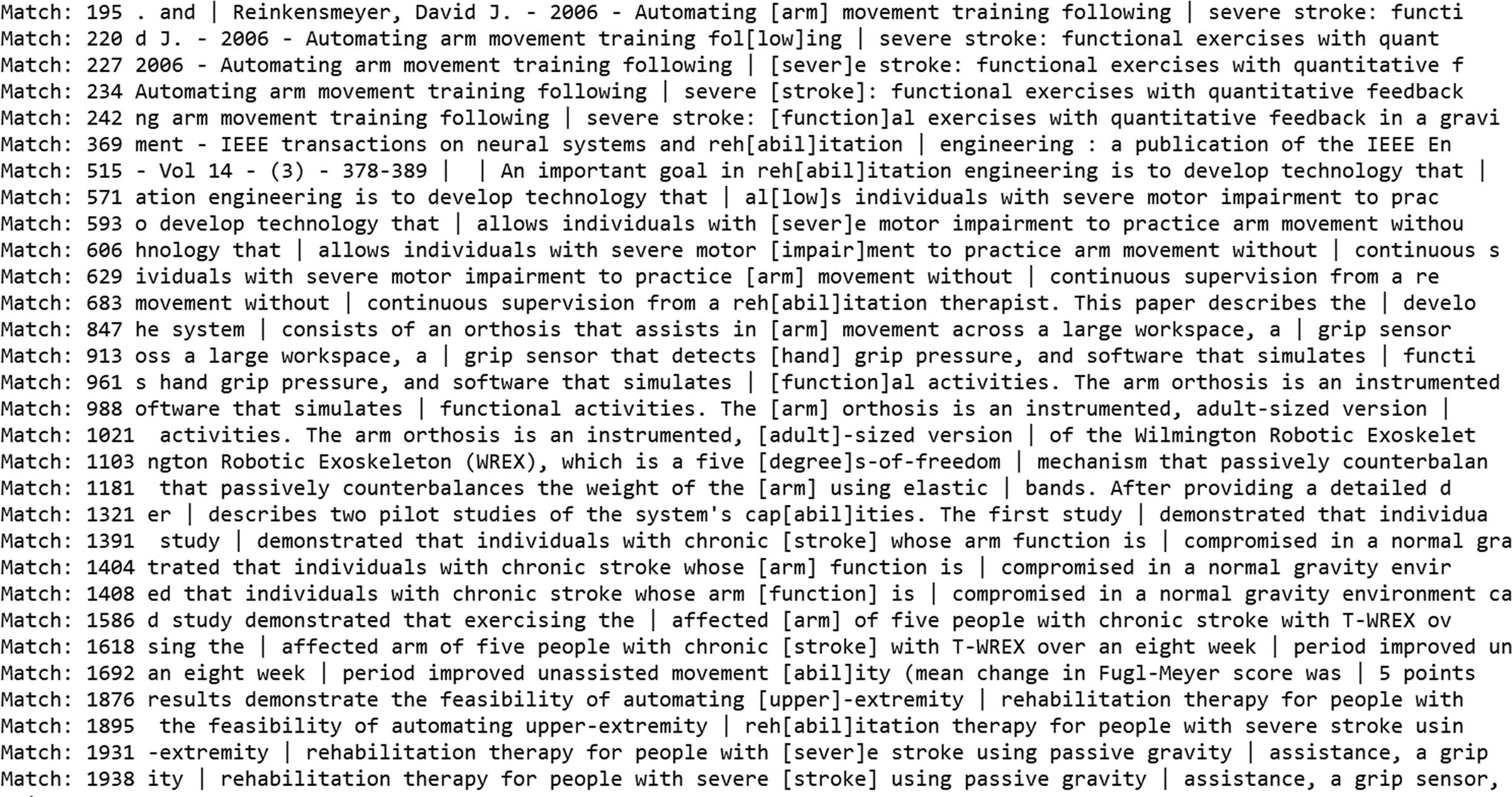

We developed a Python algorithm for keyword identification (text mining) within papers for a systematic review of upper limb motor impairment after stroke [4]. The algorithm was designed to be flexible so the keywords could be changed based on different eligibility criteria. It searched for given keywords in entire, previously identified PDF files and generated a text file (.txt) (output) including relevant information to make a decision regarding abstract eligibility (figure 1) [5]. The standard method involved manually reading each abstract for keywords. We firstly compared both methods in terms of keyword accuracy, eligibility, and decision time. Next, we undertook an external validation by adapting the algorithm for a different review and comparing studies included via the standard method with those included via the algorithm.

Both methods identified the same 610 studies for inclusion. For the exemplar systematic review, the algorithm failed to generate outputs on 72 out of 2,789 documents processed (2.6%). Reasons were: password-protected files (2.4% of scanned documents), image PDFs (0.1%), entire journal issues (0.1%). Based on a sample of 21 randomly selected abstracts, the standard screening took 1.58 ± 0.26 min. per abstract. Computer output screening took 0.43 ± 0.14 min. per abstract. The mean difference between the two methods was 1.15 min. (P < 0.0001), saving 73% of time per abstract. For the external validation, use of the algorithm resulted in the same studies being identified except for one which we excluded based on the interpretation of the comparison intervention. We made our algorithm freely available on GitHub for the purpose of future replications or improvements [6].

We designed and tested a purpose-built text mining algorithm by comparing it with the standard screening method in terms of accuracy in identifying correct keywords and eligible abstracts, as well as decision time. For external validation, we compared studies, identified as eligible using the algorithm, with those included in another review. Findings indicated that our novel text mining-based algorithm is a valid method for abstract screening and can significantly reduce timescale when undertaking systematic reviews. The algorithm is flexible and can be applied to other systematic reviews on any topic. It can be used, and improved, in the future – not only for authors but also for reviewers and editors.

[1] Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, et al. Cochrane handbook for systematic reviews of interventions 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook. [Accessed 28 June 2024]

[2] Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med 2010; 7:e1000326.

[3] O’Mara-Eves A, Thomas J, McNaught J, Miwa M, Ananiadou S. Using text mining for study identification in systematic reviews: a systematic review of current approaches. Syst Rev 2015; 4. doi: 10.1186/2046-4053-4-5

[4] Ierardi E, Coupar F, Ali M, van Wijck F. A systematic review of descriptors of levels of severity of upper limb activity limitation and impairment after stroke 2019. https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=226244. [Accessed 28 June 2024]

[5] Ierardi E, Eilbeck JC, van Wijck F, Ali M, Coupar F. Data mining versus manual screening to select papers for inclusion in systematic reviews: a novel method to increase efficiency. Int J Rehabil Res. 2023;46(3):284-292. doi:10.1097/MRR.0000000000000595

[6] ceilbeck/key_wd_PDF_search: searches a directory of PDF files to look for key words, and prints out results. n.d. https://github.com/ceilbeck/key_wd_PDF_search. [Accessed 28 June 2024]